MOBILE WiMAX

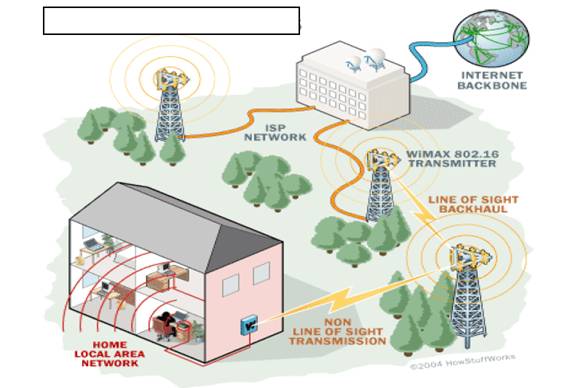

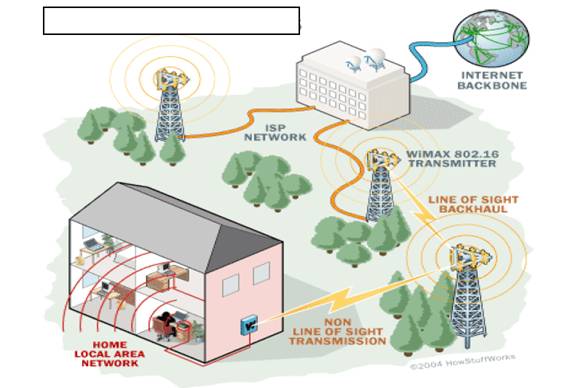

Mobile WiMAX is a broadband wireless solution that enables convergence of mobile and fixed broadband networks through a common wide-area broadband radio access technology and a flexible network architecture. The Mobile WiMAX air interface adopts Orthogonal Frequency Division Multiple Access (OFDMA) for improved multipath performance in non-line-of-sight (NLOS) environments. Scalable OFDMA (SOFDMA) is introduced in the IEEE 802.16e amendment to support scalable channel bandwidths from 1.25 to 20 MHz.
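
As a rough illustration of how SOFDMA scales, the sketch below computes the OFDMA numerology for several bandwidths. It assumes the values commonly quoted for IEEE 802.16e SOFDMA (FFT sizes of 128, 512, 1024, and 2048 for 1.25, 5, 10, and 20 MHz, a sampling factor of 28/25, and a 1/8 cyclic prefix); `FFT_SIZE`, `sampling_rate`, and `numerology` are hypothetical helper names, not a standard API. It shows that the FFT size grows with the bandwidth, so the subcarrier spacing and symbol duration stay fixed.

```python
from fractions import Fraction

# Assumed SOFDMA parameters (commonly quoted values for IEEE 802.16e).
SAMPLING_FACTOR = Fraction(28, 25)   # oversampling factor n
CP_RATIO = Fraction(1, 8)            # cyclic prefix as a fraction of the useful symbol
FFT_SIZE = {                         # channel bandwidth (Hz) -> FFT size
    1_250_000: 128,
    5_000_000: 512,
    10_000_000: 1024,
    20_000_000: 2048,
}


def sampling_rate(bw_hz: int) -> int:
    """Sampling rate Fs = floor(n * BW / 8000) * 8000, in Hz."""
    return (SAMPLING_FACTOR * bw_hz // 8000) * 8000


def numerology(bw_hz: int) -> dict:
    """Return FFT size, sampling rate, subcarrier spacing and symbol times."""
    n_fft = FFT_SIZE[bw_hz]
    fs = sampling_rate(bw_hz)
    spacing = Fraction(fs, n_fft)          # subcarrier spacing in Hz
    useful = 1 / spacing                   # useful symbol time Tb in seconds
    symbol = useful * (1 + CP_RATIO)       # Ts = Tb + Tg (cyclic prefix)
    return {
        "fft": n_fft,
        "fs_hz": fs,
        "spacing_hz": float(spacing),
        "tb_us": float(useful) * 1e6,
        "ts_us": float(symbol) * 1e6,
    }


for bw in FFT_SIZE:
    p = numerology(bw)
    print(f"{bw / 1e6:5.2f} MHz: FFT {p['fft']:4d}, Fs {p['fs_hz'] / 1e6:5.2f} MHz, "
          f"spacing {p['spacing_hz']:.1f} Hz, Tb {p['tb_us']:.2f} us, Ts {p['ts_us']:.2f} us")
```

Under these assumptions every bandwidth yields the same 10.94 kHz subcarrier spacing, so only the number of subcarriers changes from one channelization to the next.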

The Mobile Technical Group (MTG) in the WiMAX Forum is developing the Mobile WiMAX system profiles that will define the mandatory and optional features of the IEEE standard needed to build a Mobile WiMAX-compliant air interface that the WiMAX Forum can certify. The Mobile WiMAX System Profile allows mobile systems to be configured from a common base feature set, ensuring baseline functionality and full interoperability between terminals and base stations. Some elements of the base station profiles are optional, giving additional flexibility for specific deployment scenarios that may require either capacity-optimized or coverage-optimized configurations.

Introduction to Mobile WiMAX

Release-1 Mobile WiMAX profiles will cover 5, 7, 8.75, and 10 MHz channel bandwidths for licensed worldwide spectrum allocations in the 2.3 GHz, 2.5 GHz, and 3.5 GHz frequency bands.

Mobile WiMAX systems offer scalability in both radio access technology and network architecture, providing a great deal of flexibility in network deployment options and service offerings. Some of the salient features supported by Mobile WiMAX are:

• High Data Rates. MIMO (Multiple Input Multiple Output) antenna techniques, flexible sub-channelization schemes, and advanced coding and modulation together enable Mobile WiMAX to support peak DL data rates of up to 63 Mbps per sector and peak UL data rates of up to 28 Mbps per sector in a 10 MHz channel.

• Quality of Service (QoS). QoS is the fundamental premise of the IEEE 802.16 MAC architecture. The MAC defines Service Flows that can map to DiffServ code points, enabling end-to-end IP-based QoS. Additionally, sub-channelization schemes provide a flexible mechanism for optimal scheduling of space, frequency, and time resources over the air interface on a frame-by-frame basis.

• Scalability. Despite an increasingly globalized economy, spectrum allocations for wireless broadband remain quite disparate worldwide. Mobile WiMAX technology is therefore designed to scale across channelizations from 1.25 to 20 MHz, complying with varied worldwide requirements while efforts toward long-term spectrum harmonization proceed. This also allows diverse economies to benefit from Mobile WiMAX for their specific geographic needs, such as providing affordable Internet access in rural settings or enhancing mobile broadband capacity in metropolitan and suburban areas.

• Security. Mobile WiMAX supports a diverse set of user credentials, including SIM/USIM cards, smart cards, digital certificates, and username/password schemes.

• Mobility. Mobile WiMAX supports optimized handover schemes with latencies of less than 50 milliseconds, so that real-time applications such as VoIP perform without service degradation. Flexible key management schemes ensure that security is maintained during handover.
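
The QoS feature above says that 802.16 Service Flows can be mapped to DiffServ code points. The sketch below shows one way a classifier might do that. The five scheduling services (UGS, ertPS, rtPS, nrtPS, BE) are those defined for IEEE 802.16e, but the DSCP-to-service table is a hypothetical operator policy, not something the standard prescribes, and `DSCP_TO_SERVICE`, `dscp_from_tos`, and `classify` are illustrative names.

```python
from enum import Enum


class SchedulingService(Enum):
    """Uplink scheduling services defined for IEEE 802.16e service flows."""
    UGS = "Unsolicited Grant Service"
    ERTPS = "Extended Real-Time Polling Service"
    RTPS = "Real-Time Polling Service"
    NRTPS = "Non-Real-Time Polling Service"
    BE = "Best Effort"


# Hypothetical operator policy: DSCP value -> scheduling service.
DSCP_TO_SERVICE = {
    46: SchedulingService.UGS,    # EF: constant-rate VoIP (assumed)
    40: SchedulingService.ERTPS,  # CS5: VoIP with silence suppression (assumed)
    34: SchedulingService.RTPS,   # AF41: streaming video (assumed)
    18: SchedulingService.NRTPS,  # AF21: bulk transfer such as FTP (assumed)
}


def dscp_from_tos(tos: int) -> int:
    """DSCP is the upper six bits of the IPv4 TOS / IPv6 Traffic Class byte."""
    return (tos >> 2) & 0x3F


def classify(tos: int) -> SchedulingService:
    """Map a packet's TOS byte to a scheduling service; unknown DSCPs get Best Effort."""
    return DSCP_TO_SERVICE.get(dscp_from_tos(tos), SchedulingService.BE)


for tos in (0xB8, 0xA0, 0x88, 0x48, 0x00):
    print(f"TOS 0x{tos:02X} -> DSCP {dscp_from_tos(tos):2d} -> {classify(tos).name}")
```

Falling back to Best Effort for unmapped code points keeps the classifier total, so unknown traffic is still carried, just without guarantees.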

WiMAX must be able to provide reliable service over long distances to customers using indoor terminals or PC cards (like today's WLAN cards). These requirements, together with the limited transmit power allowed by health regulations, constrain the link budget. Uplink sub-channelization and smart antennas at the base station are needed to overcome these constraints. The WiMAX system relies on a new radio physical (PHY) layer and an appropriate MAC (Medium Access Control) layer to support all demands driven by the target applications.
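
The link-budget argument made earlier in this section, that limited terminal transmit power constrains the uplink and that uplink sub-channelization helps, can be made concrete with some arithmetic. This is a minimal sketch under stated assumptions: it uses the free-space path-loss formula plus a single lumped extra loss for penetration and NLOS effects, and every power, gain, loss, sensitivity, and subchannel figure is a hypothetical placeholder, not a WiMAX profile value. When a terminal concentrates its full transmit power on k of N subchannels, the power per subcarrier rises by 10 log10(N/k) dB.

```python
import math


def fspl_db(distance_km: float, freq_mhz: float) -> float:
    """Free-space path loss in dB (distance in km, frequency in MHz)."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44


def subchannelization_gain_db(total_subchannels: int, used_subchannels: int) -> float:
    """Gain from putting the full transmit power into a subset of subchannels."""
    return 10 * math.log10(total_subchannels / used_subchannels)


def uplink_margin_db(distance_km, freq_mhz, tx_dbm, tx_gain_dbi, rx_gain_dbi,
                     extra_loss_db, sensitivity_dbm, total_subchannels, used_subchannels):
    """Received level minus required level, including sub-channelization gain."""
    rx_dbm = (tx_dbm + tx_gain_dbi + rx_gain_dbi
              - fspl_db(distance_km, freq_mhz) - extra_loss_db)
    rx_dbm += subchannelization_gain_db(total_subchannels, used_subchannels)
    return rx_dbm - sensitivity_dbm


# Hypothetical indoor PC-card terminal at 2.5 GHz; every number here is an assumption.
PARAMS = dict(distance_km=5.0, freq_mhz=2500.0, tx_dbm=23.0, tx_gain_dbi=0.0,
              rx_gain_dbi=15.0, extra_loss_db=35.0, sensitivity_dbm=-100.0,
              total_subchannels=32)

for used in (32, 8, 2):
    m = uplink_margin_db(used_subchannels=used, **PARAMS)
    print(f"{used:2d} of 32 subchannels: margin {m:6.1f} dB")
```

With these placeholder numbers only the 2-subchannel case shows a positive margin: the terminal trades per-user throughput for reach, which is the trade-off uplink sub-channelization offers.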

Physical Layer Description

The PHY layer modulation is based on OFDMA, combined with a centralized MAC layer for optimized resource allocation and QoS support for different types of services (VoIP, real-time and non-real-time services, best effort). The OFDMA PHY layer is well suited to the NLOS propagation environment in the 2 to 11 GHz frequency range.
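
Why an OFDM-based PHY copes well with NLOS multipath can be seen in a toy simulation: with a cyclic prefix longer than the channel's delay spread, the multipath channel acts on each subcarrier as a single complex gain, so a one-tap equalizer per subcarrier recovers the data. The sketch assumes a 64-point transform, an 8-sample cyclic prefix, and a made-up 3-tap channel; these are illustrative values, not WiMAX profile parameters, and a plain O(N²) DFT from the standard library stands in for an FFT to keep the example self-contained.

```python
import cmath
import random


def dft(x):
    """Naive discrete Fourier transform (O(N^2)), adequate for a toy example."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    """Inverse of dft()."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


N = 64                       # toy transform size (assumption)
CP = 8                       # cyclic prefix length in samples (N/8, assumption)
CHANNEL = [1.0, 0.5, 0.25j]  # hypothetical 3-tap multipath channel, shorter than CP

random.seed(1)
data = [complex(random.choice((-1, 1)), random.choice((-1, 1))) for _ in range(N)]  # QPSK

tx = idft(data)
tx_cp = tx[-CP:] + tx        # prepend the cyclic prefix

# Multipath: each received sample is a weighted sum of delayed transmit samples.
rx = [sum(h * tx_cp[t - d] for d, h in enumerate(CHANNEL) if t - d >= 0)
      for t in range(len(tx_cp))]

Y = dft(rx[CP:])                             # drop the prefix, back to frequency domain
H = dft(CHANNEL + [0] * (N - len(CHANNEL)))  # per-subcarrier channel gain
equalized = [y / h for y, h in zip(Y, H)]    # one complex division per subcarrier

max_error = max(abs(e - d) for e, d in zip(equalized, data))
print(f"max error after one-tap equalization: {max_error:.2e}")
```

The recovery is exact (up to rounding) only because the channel is no longer than CP + 1 taps; with a longer channel the prefix no longer absorbs the delay spread and the one-tap equalizer leaves residual error.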

The OFDMA PHY is inherently robust in handling the significant delay spread caused by typical NLOS reflections. Together with adaptive modulation, which is applied to each subscriber individually according to its radio channel conditions, OFDMA can provide a high spectral efficiency of about 3 to 4 bit/s/Hz. However, compared with single-carrier modulation, the OFDMA signal has a higher peak-to-average power ratio (PAPR) and stricter frequency accuracy requirements. Careful selection of power amplifiers and frequency recovery concepts is therefore crucial. WiMAX provides flexibility in channelization, carrier frequency, and duplex mode (TDD and FDD) to meet a variety of requirements for available spectrum resources and targeted services.
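
The higher peak-to-average power ratio of the OFDMA signal is easy to measure numerically. The sketch below compares a single-carrier QPSK burst sampled at the symbol instants (no pulse shaping, so its constant envelope gives a PAPR of 0 dB) with one OFDM symbol carrying random QPSK on 64 subcarriers, an assumed toy size. The OFDM symbol is oversampled four times by zero-padding the spectrum so that peaks between samples are not missed. It is an illustration of the effect, not a WiMAX-specific measurement.

```python
import cmath
import math
import random


def idft(X):
    """Naive inverse DFT (O(N^2)), adequate for a toy example."""
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def papr_db(samples):
    """Peak-to-average power ratio of a block of complex samples, in dB."""
    powers = [s.real ** 2 + s.imag ** 2 for s in samples]
    return 10 * math.log10(max(powers) / (sum(powers) / len(powers)))


N = 64          # subcarriers (toy value, assumption)
OVERSAMPLE = 4  # zero-padding factor to approximate the continuous-time peak

random.seed(7)
qpsk = [complex(random.choice((-1, 1)), random.choice((-1, 1))) for _ in range(N)]

# Single carrier, sampled at symbol instants: every sample has power 2.
single_carrier = qpsk

# OFDM: zeros inserted between positive and negative frequencies for oversampling.
half = N // 2
padded = qpsk[:half] + [0] * ((OVERSAMPLE - 1) * N) + qpsk[half:]
ofdm_symbol = idft(padded)

print(f"single-carrier PAPR:     {papr_db(single_carrier):.2f} dB")
print(f"OFDM PAPR:               {papr_db(ofdm_symbol):.2f} dB")
print(f"upper bound 10*log10(N): {10 * math.log10(N):.2f} dB")
```

The worst case occurs when all subcarriers add in phase, which bounds the PAPR at N (about 18 dB for 64 subcarriers); the power amplifier must be backed off to accommodate such peaks, which is why amplifier selection matters.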